Dr. Josh Harguess, AI Security Chief

“Red teaming” and “AI red teaming” are two approaches used in security and assessment practices to test and improve systems. While traditional red teaming focuses on evaluating the security of physical and cyber systems through simulated adversary attacks, AI red teaming specifically addresses the security, robustness, and trustworthiness of artificial intelligence systems. Here are five main differences between these two approaches:

Objective Focus:

- Traditional Red Teaming: Aims to identify vulnerabilities in physical security, network security, and information systems. It simulates real-world attacks to evaluate how well a system can withstand malicious activities from human attackers.

- AI Red Teaming: Focuses specifically on the vulnerabilities inherent in AI and machine learning (ML) models. This includes assessing the model’s resistance to adversarial attacks, data poisoning, and exploring how AI decisions can be manipulated.
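
To make data poisoning concrete, here is a minimal sketch of what a red team might check. Everything in it is an illustrative assumption rather than something taken from a real engagement: the one-dimensional toy data, the nearest-centroid "model", and the helper names `train_centroids`, `predict`, and `accuracy` are all hypothetical.

```python
def train_centroids(samples):
    """Fit a toy nearest-centroid model: the mean feature value per label."""
    sums, counts = {}, {}
    for value, label in samples:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict(centroids, value):
    """Return the label whose centroid is closest to the input value."""
    return min(centroids, key=lambda label: abs(value - centroids[label]))


def accuracy(centroids, samples):
    """Fraction of (value, label) pairs the model classifies correctly."""
    correct = sum(predict(centroids, v) == y for v, y in samples)
    return correct / len(samples)


# Hypothetical clean training set: class 0 sits near 0-1.5, class 1 near 3-4.5.
clean_train = [(0.0, 0), (0.5, 0), (1.0, 0), (1.5, 0),
               (3.0, 1), (3.5, 1), (4.0, 1), (4.5, 1)]

# Poisoned records: class-1-looking values deliberately mislabeled as class 0.
poison = [(4.0, 0), (4.5, 0), (5.0, 0), (5.0, 0)]

# Held-out points used to compare the two models.
held_out = [(1.8, 0), (2.0, 0), (2.6, 1), (3.0, 1)]

clean_model = train_centroids(clean_train)
poisoned_model = train_centroids(clean_train + poison)

print("clean accuracy:   ", accuracy(clean_model, held_out))
print("poisoned accuracy:", accuracy(poisoned_model, held_out))
```

On this toy data, four mislabeled records are enough to drag the class 0 centroid toward class 1, and held-out accuracy falls from 1.0 to 0.5. Real models and datasets behave far less predictably, so this only illustrates the shape of the test, not a typical result.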

Methodology and Techniques:

- Traditional Red Teaming: Uses techniques such as penetration testing, social engineering, and physical security assessments. The methods often mimic those that a human attacker might use.

- AI Red Teaming: Employs adversarial machine learning techniques, including generating adversarial examples (subtly modified inputs that cause AI to make mistakes), data poisoning, and model inversion attacks. These methods are specific to the way AI systems process information.
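
As a rough illustration of an adversarial example, the sketch below applies a fast-gradient-sign-style perturbation to a tiny logistic-regression classifier. The weights, bias, input, and `epsilon` value are hypothetical and chosen only so the effect is easy to see. Real attacks target much larger models, usually through an ML framework's automatic differentiation.

```python
import math

# Hypothetical toy model: a fixed logistic-regression classifier.
WEIGHTS = [2.0, -3.0, 1.0]
BIAS = 0.5


def predict_proba(x):
    """Probability that input x belongs to class 1."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_perturb(x, true_label, epsilon):
    """Shift each feature by +/- epsilon in the direction that increases
    the cross-entropy loss for true_label."""
    p = predict_proba(x)
    # For logistic regression, d(loss)/d(x_i) = (p - y) * w_i.
    grad = [(p - true_label) * w for w in WEIGHTS]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


x = [0.4, 0.2, 0.1]                      # clean input, classified as class 1
x_adv = fgsm_perturb(x, true_label=1, epsilon=0.2)

print("clean probability of class 1:      ", round(predict_proba(x), 3))
print("adversarial probability of class 1:", round(predict_proba(x_adv), 3))
```

Each feature moves by at most 0.2, yet the predicted probability of class 1 drops from roughly 0.69 to roughly 0.40, so the predicted class flips. The perturbation is found from the model's own gradient, which is why this kind of test has no direct analogue in traditional penetration testing.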

Expertise Required:

- Traditional Red Teaming: Requires a broad range of cybersecurity and physical security expertise. Practitioners need to understand network architecture, software vulnerabilities, and human factors in security.

- AI Red Teaming: Requires deep knowledge of machine learning algorithms, data science, and the specific ways AI systems can be tricked or misled. Practitioners often have a background in computer science with a specialization in AI.

Attack Vectors:

- Traditional Red Teaming: Focuses on exploiting weaknesses in software, hardware, and human elements of security systems. The attack vectors are diverse, including phishing, brute force attacks, and physical breaches.

- AI Red Teaming: Concentrates on exploiting the mathematical and algorithmic vulnerabilities in AI systems. Attack vectors are more specialized, involving manipulating the input data or exploiting the model’s logic to cause incorrect outputs. Additionally, the attack surface for AI is currently several orders of magnitude larger than that of traditional cyber systems because (1) there has been less successful work on constraining the AI attack surface and (2) AI performance is much more sensitive to the mitigation strategies that have been tried to reduce that attack surface.

Outcome and Remediation:

- Traditional Red Teaming: Outcomes often lead to recommendations for strengthening network defenses, improving security protocols, and enhancing physical security measures.

- AI Red Teaming: Results in recommendations for improving the robustness of AI models against adversarial attacks, enhancing data integrity, and implementing safeguards in AI decision-making processes.

Overall, while both traditional and AI red teaming aim to improve system security, they differ significantly in focus, methodologies, required expertise, attack vectors, and the nature of their remediation strategies. AI red teaming is a specialized subset that has emerged in response to the unique challenges posed by AI technologies.

In addition to the primary differences already outlined, there are a few more nuanced distinctions between traditional and AI red teaming that are worth mentioning:

Scalability and Automation:

- Traditional Red Teaming: While aspects of traditional red teaming can be automated, such as vulnerability scanning, much of it relies on human creativity and adaptability to simulate realistic attack scenarios. The process can be labor-intensive and might not scale easily across different systems without significant adaptation.

- AI Red Teaming: Given the digital and algorithmic nature of AI systems, AI red teaming can often leverage more scalable and automated techniques. For instance, generating adversarial examples can be automated once an effective method is devised for a specific model, allowing for large-scale testing.
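
The automation point can be sketched as a loop that applies one perturbation method to many inputs and reports how often the prediction flips. This is only an illustration under stated assumptions: the toy logistic-regression weights, the randomly generated inputs, and the helper name `attack_success_rate` are hypothetical, and the model's own clean prediction stands in for a ground-truth label.

```python
import math
import random

# Hypothetical toy model: a fixed logistic-regression classifier.
WEIGHTS = [2.0, -3.0, 1.0]
BIAS = 0.5


def predict_proba(x):
    """Probability that input x belongs to class 1."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def predict(x):
    """Hard class decision at the 0.5 threshold."""
    return 1 if predict_proba(x) >= 0.5 else 0


def fgsm_perturb(x, label, epsilon):
    """Fast-gradient-sign-style step that pushes x away from `label`."""
    p = predict_proba(x)
    grad = [(p - label) * w for w in WEIGHTS]   # d(loss)/d(x_i)
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def attack_success_rate(inputs, epsilon):
    """Fraction of inputs whose predicted class flips under the perturbation."""
    flipped = 0
    for x in inputs:
        label = predict(x)                      # clean prediction as the label
        if predict(fgsm_perturb(x, label, epsilon)) != label:
            flipped += 1
    return flipped / len(inputs)


rng = random.Random(0)                          # fixed seed for repeatability
test_inputs = [[rng.uniform(-1, 1) for _ in WEIGHTS] for _ in range(1000)]

rates = {eps: attack_success_rate(test_inputs, eps) for eps in (0.05, 0.1, 0.2)}
for eps, rate in rates.items():
    print(f"epsilon={eps}: {rate:.1%} of predictions flipped")
```

Once the perturbation routine exists, testing a thousand inputs costs no more human effort than testing one. A traditional engagement, by contrast, typically needs a person to adapt each scenario.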

Dynamic Adversarial Tactics:

- Traditional Red Teaming: Adversaries may change their tactics based on human judgment and situational awareness. This requires red teams to continuously evolve their strategies to simulate realistic threats effectively.

- AI Red Teaming: The adversarial tactics against AI systems often involve understanding and exploiting specific algorithmic vulnerabilities. As AI technologies evolve, so do the techniques for attacking them, which can lead to a rapid arms race between developing defensive measures and new forms of attacks.

Ethical and Legal Considerations:

- Traditional Red Teaming: Involves legal and ethical considerations, especially regarding privacy, data protection, and the potential for collateral damage. These activities must be carefully planned to avoid unintended consequences.

- AI Red Teaming: While also subject to ethical and legal considerations, the focus includes the potential biases introduced by adversarial attacks, the misuse of AI through manipulated models, and ensuring that AI behaves reliably under adversarial conditions without causing harm.

Impact Assessment:

- Traditional Red Teaming: The impact of vulnerabilities is often assessed in terms of potential data breaches, financial loss, damage to physical infrastructure, or risk to human life.

- AI Red Teaming: Impact assessment includes evaluating the potential for incorrect decisions by AI systems, which could lead to reputational damage, biased outcomes, or unintended ethical implications.

Countermeasure Complexity:

- Traditional Red Teaming: Countermeasures might involve enhancing security protocols, updating software, and physical security improvements. These solutions are generally well-understood and can be directly implemented.

- AI Red Teaming: Developing countermeasures against AI vulnerabilities can be more complex, involving not just technical fixes but also adjustments to the model’s training data, algorithmic changes, and continuous monitoring for new types of adversarial attacks.

These additional points underscore the complexity and evolving nature of AI red teaming compared to traditional red teaming, highlighting the need for specialized knowledge and techniques to secure AI systems effectively.

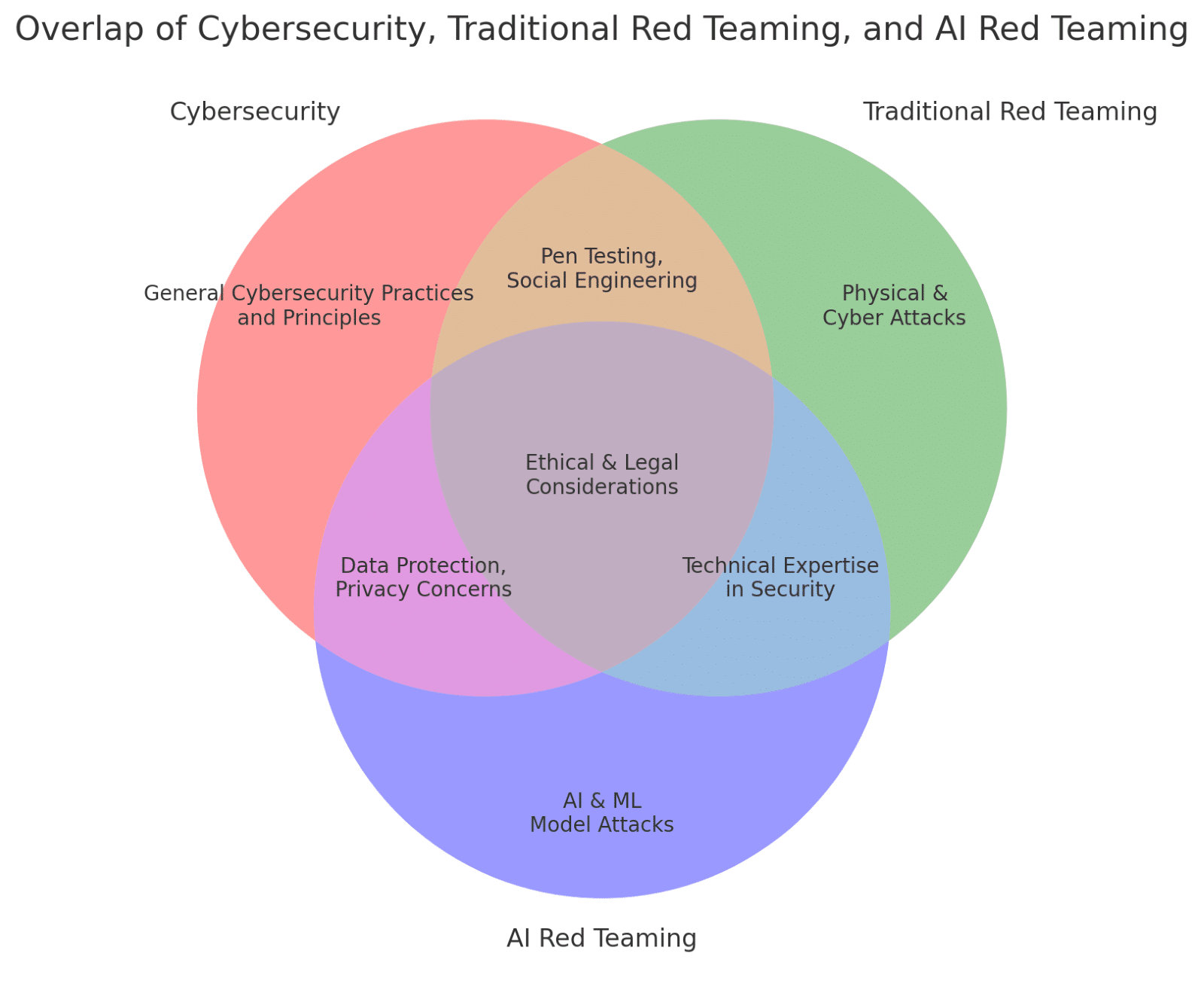

The Venn diagram above illustrates the overlap among cybersecurity, traditional red teaming, and AI red teaming. It highlights the unique and shared aspects of each field:

- General Cybersecurity Practices and Principles: Fundamental to all areas, focusing on protecting systems, networks, and data from digital attacks.

- Physical & Cyber Attacks: A focus of traditional red teaming, involving simulated real-world attacks on both physical and cyber aspects.

- AI & ML Model Attacks: Specific to AI red teaming, concentrating on vulnerabilities within artificial intelligence and machine learning models.

- Pen Testing, Social Engineering: Techniques used in both cybersecurity and traditional red teaming to evaluate the human and technical vulnerabilities.

- Data Protection, Privacy Concerns: Common to both cybersecurity and AI red teaming, emphasizing the importance of safeguarding data and maintaining user privacy.

- Technical Expertise in Security: Essential for both traditional and AI red teaming, requiring specialized knowledge to identify and exploit vulnerabilities.

- Ethical & Legal Considerations: At the intersection of all three fields, highlighting the importance of conducting assessments within ethical and legal boundaries.

In summary, cybersecurity serves as the foundational framework encompassing both traditional and AI red teaming, each addressing distinct aspects of security vulnerabilities and defense mechanisms. Traditional red teaming focuses on identifying and exploiting weaknesses in physical and cyber systems through techniques such as penetration testing and social engineering, leveraging broad cybersecurity and physical security expertise. In contrast, AI red teaming delves into the specific vulnerabilities of artificial intelligence and machine learning models, employing adversarial techniques to test the robustness of AI systems against manipulated inputs and attacks. Both approaches require a deep understanding of their respective domains, including technical expertise in security protocols, ethical and legal considerations, and a commitment to enhancing data protection and privacy. The intersection of these fields underscores the importance of a comprehensive security strategy that addresses the wide range of potential threats in today’s increasingly digital and AI-driven world, highlighting the need for specialized knowledge and techniques to safeguard against both conventional and emerging threats.

About Cranium

Cranium is the leading enterprise AI security and trust software firm, enabling organizations to gain visibility, security, and compliance across their AI and GenAI systems. Through the Cranium Enterprise software platform, organizations can map, monitor, and manage their AI/ML environments against adversarial threats without interrupting how teams train, test, and deploy their AI models. The Cranium platform also allows organizations to quickly gather and share information about the trustworthiness and compliance of their AI models with their third parties, clients, and regulators. Cranium helps cybersecurity and data science teams understand everywhere that AI impacts their systems, data, or services. Secure your AI at Cranium.AI.